Memory models

Akka provides an in-memory, durable store for stateful data. Stateful data can be scoped to a single agent, or made available system-wide. Stateful data is persisted in an embedded event store that tracks incremental state changes, which enables recovery of system state (resilience) to its last known modification. State is automatically sharded and rebalanced across Akka nodes running in a cluster to support elastic scaling to terabytes of memory. State can also be replicated across regions for failover and disaster recovery.

Memory in Akka is structured around entities. An entity holds a particular slice of application state and evolves it over time according to a defined state model. These state models determine how state is stored, updated, and replicated. This approach provides consistency and durability across the system, even in the face of failure. Agents, for example, manage their memory through entities, whether for short-lived context or persistent behavior.

Akka uses an architectural pattern called Event Sourcing. Following this pattern, all changes to an application’s state are stored as a sequence of immutable events. Instead of saving the current state directly, Akka stores the history of what happened to it. The current state is derived by replaying those events. Memory is saved in an event journal managed by Akka, with events recorded both sequentially and via periodic snapshots for faster recovery.

| Event | Amount | Balance |

|---|---|---|

AccountOpened |

$0 |

$0 |

FundsDeposited |

+$1,000 |

$1,000 |

FundsDeposited |

+$500 |

$1,500 |

FundsWithdrawn |

-$200 |

$1,300 |

FundsDeposited |

+$300 |

$1,600 |

FundsWithdrawn |

-$400 |

$1,200 |

Akka uses the Event Sourcing pattern for many internal stateful operations. For example, Workflows rely on Event Sourcing to record each step as it progresses. This provides a complete history of execution, which can be useful for auditing, debugging, or recovery.

| Step | Action | Workflow State |

|---|---|---|

1 |

Withdraw from Account A |

$500 withdrawn from Account A |

2 |

Reserve funds |

Funds marked for transfer |

3 |

Deposit to Account B |

$500 added to Account B |

4 |

Confirm transfer |

Transfer marked as complete |

5 |

Send notification |

Recipient notified |

6 |

Save audit record |

Transfer logged |

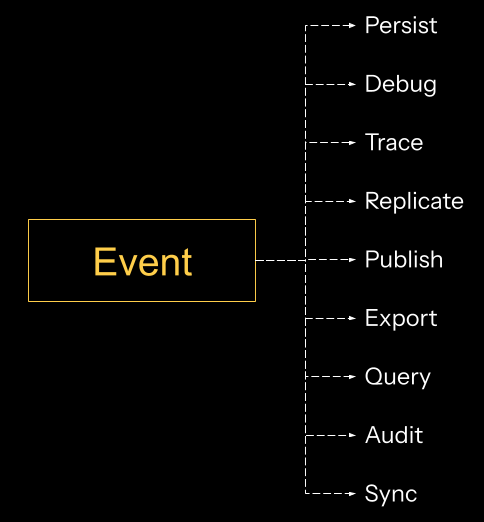

Tracking all state changes as a sequence of events allows you to create agentic systems that are also event-driven architectures. Akka provides event subscription, state subscription, brokerless messaging, and event replication, which makes it possible to chain together services that consume, monitor, synchronize, or aggregate the state of another service.

Memory is managed automatically by the Agent component. By default, each agent has session memory that stores interaction history and context using an Event Sourced Entity. This memory is durable and retained across invocations. If needed, memory behavior can be customized or disabled through configuration.

Entity state models

Entities are used to store the data defined in the domain model. They follow a specific state model chosen by the developer. The state model determines how the data is organized and persisted. Entities have data fields that can be simple or primitive types like numbers, strings, booleans, and characters. The fields can be more complex, which allows custom types to be stored in Akka.

Entities have operations that can change their state. These operations are triggered asynchronously and implemented via methods that return Effect. Operations allow entities to be dynamic and reflect the most up-to-date information and this all gets wired together for you.

Akka offers two state models: Event Sourced Entity and Key Value Entity. Event Sourced Entities build their state incrementally by storing each update as an event, while Key Value Entities store their entire state as a single entry in a Key/Value store. To replicate state across clusters and regions, Akka uses specific conflict resolution strategies for each state model.

Event Sourced Entities, Key Value Entities and Workflows replicate their state by default. If you deploy your Service to a Project that spans multiple regions the state is replicated for you with no extra work to be done. By default, any region can read the data, and will do so from a local store within the region, but only the primary region will be able to perform writes. To make this easier, Akka will forward writes to the appropriate region.

To understand more about regions and distribution see Deployment model.

Identity

Each Entity instance has a unique id that distinguishes it from others. The id can have multiple parts, such as an address, serial number, or customer number. Akka handles concurrency for Entity instances by processing requests sequentially, one after the other, within the boundaries of a transaction. Akka proactively manages state, eliminating the need for techniques like lazy loading. For each state model, Akka uses a specific back-end data store, which cannot be configured.

Origin

Stateful entities in Akka have a concept of location, that is region, and are designed to span regions and replicate their data. For more information about regions see region in the Akka deployment model.

Entities call the region they were created in their origin and keep track of it throughout their lifetime. This allows Akka to simplify some aspects of distributed state.

By default, most entities will only allow their origin region to change their state. To make this easier, Akka will automatically route state-changing operations to the origin region. This routing is asynchronous and durable, meaning network partitions will not stop the write from being queued. This gives you a read-anywhere model out of the box that automatically routes writes appropriately.

The Event Sourced state model

The Event Sourced state model captures changes to data by storing events in a journal. The current entity state is derived from the events. Interested parties can read the journal and transform the stream of events into read models (Views) or perform business actions based on events.

A client sends a request to an Endpoint . The request is handled in the Endpoint which decides to send a command to the appropriate Event sourced entity

, its identity is either determined from the request or by logic in the Endpoint.

The Event sourced entity processes the command . This command requires updating the Event sourced entity state. To update the state it emits events describing the state change. Akka stores these events in the event store

.

After successfully storing the events, the event sourced entity updates its state through its event handlers .

The business logic also describes the reply as the commands effect which is passed back to the Endpoint . The Endpoint replies to the client when the reply is processed

.

| Event sourced entities express state changes as events that get applied to update the state. |

The Key Value state model

In the Key Value state model, only the current state of the Entity is persisted - its value. Akka caches the state to minimize data store access. Interested parties can subscribe to state changes emitted by a Key Value Entity and perform business actions based on those state changes.

A client sends a request to an Endpoint . The request is handled in the Endpoint which decides to send a command to the appropriate Key Value entity

, its identity is either determined from the request or by logic in the Endpoint.

The Key Value entity processes the command . This command requires updating the Key Value entity state. To persist the new state of the Key Value entity, it returns an effect. Akka updates the full state in its persistent data store

.

The business logic also describes the reply as the commands effect which is passed back to the Endpoint . The Endpoint replies to the client when the reply is processed

.

| Key Value entities capture state as one single unit, they do not express state changes in events. |

State models and replication

Event Sourced entities are replicated between all regions in an Akka project by default. This allows for a multi-reader capability, with writes automatically routed to the correct region based on the origin of the entity.

In order to have multi-writer (or write anywhere) capabilities you must implement a conflict-free replicated data type (CRDT) for your Event Sourced Entity. This allows data to be shared across multiple instances of an entity and is eventually consistent to provide high availability with low latency. The underlying CRDT semantics allow replicated Event Sourced Entity instances to update their state independently and concurrently and without coordination. The state changes will always converge without conflicts, but note that with the state being eventually consistent, reading the current data may return an out-of-date value.

| Although Key Value Entities are planned to support a Last Writer Wins (LWW) mechanism, this feature is not yet available. |