Using Confluent Cloud as Kafka service

Akka connects to Confluent Cloud Kafka services via TLS, authenticating using SASL (Simple Authentication and Security Layer) PLAIN.
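To test an API key outside Akka, the same connection can be expressed as ordinary Kafka client settings. The sketch below is not Akka's implementation. It assumes the librdkafka-style property names used by the confluent-kafka Python client, and the function name `confluent_cloud_client_config` and the placeholder values are hypothetical. The API key's "Key" is used as the username and its "Secret" as the password, as in the steps in this guide.

```python
def confluent_cloud_client_config(bootstrap_servers: str, api_key: str, api_secret: str) -> dict:
    """Build Kafka client settings for TLS transport with SASL PLAIN authentication."""
    if not bootstrap_servers or not api_key or not api_secret:
        raise ValueError("bootstrap servers, API key and API secret are all required")
    return {
        "bootstrap.servers": bootstrap_servers,
        # SASL_SSL means SASL authentication carried over a TLS-encrypted connection.
        "security.protocol": "SASL_SSL",
        "sasl.mechanisms": "PLAIN",
        "sasl.username": api_key,      # the API key's "Key"
        "sasl.password": api_secret,   # the API key's "Secret"
    }


if __name__ == "__main__":
    config = confluent_cloud_client_config(
        "<bootstrap server address>", "<API_KEY>", "<the API key secret>"
    )
    # Print every setting except the password.
    for name, value in config.items():
        print(name, "=", "***" if name == "sasl.password" else value)
```

A dictionary like this could be passed to a Kafka client for a quick connectivity check. Akka itself only needs the broker configuration described in the steps below.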

Steps to connect to a Confluent Cloud Kafka broker

Take the following steps to configure access to your Confluent Cloud Kafka broker for your Akka project.

-

Log in to Confluent Cloud and select the cluster Akka should connect to. Create a new cluster if you don’t have one already.

-

Create an API key for authentication

-

Select "API Keys"

-

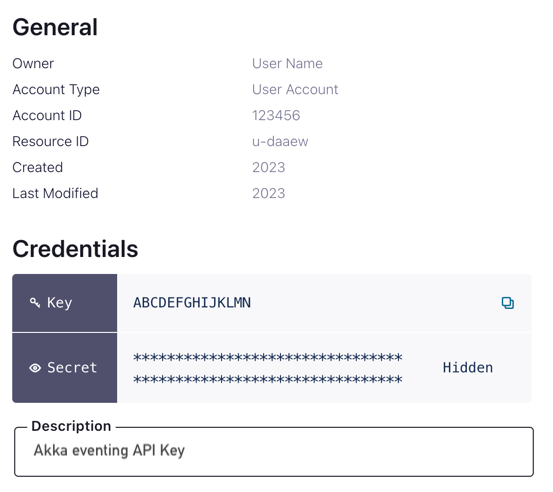

Choose the API key scope for development use, or proper setup with ACLs. The API key’s "Key" is the username, the "Secret" acts as password.

-

When the API key was created, your browser downloads an

api-key-… .txtfile with the API key details.

-

- Ensure you are on the correct Akka project

      akka config get-project

- Copy the API secret and store it in an Akka secret (e.g. called confluent-api-secret)

      akka secret create generic confluent-api-secret --literal secret=<the API key secret>

- Select "Cluster Settings" and copy the bootstrap server address shown in the "Endpoints" box.

- Use akka projects config to set the broker details. Set the username to the API key’s "Key" and the bootstrap servers to the address copied in the previous step.

      akka projects config set broker \
        --broker-service kafka \
        --broker-auth plain \
        --broker-user <API_KEY> \
        --broker-password-secret confluent-api-secret/secret \
        --broker-bootstrap-servers <bootstrap server address>

The --broker-password-secret value refers to the name of the Akka secret created earlier (followed by the key stored in it), not to the actual API key secret.

An optional description can be added with the parameter --description to provide additional notes about the broker.

The broker config can be inspected using:

    akka projects config get broker

Create a topic

To create a topic, you can either use the Confluent Cloud user interface, or the Confluent CLI.

- Browser

  - Open Confluent Cloud.

  - Go to your cluster

  - Go to the Topics page

  - Use the Add Topic button

  - Fill in the topic name, select the number of partitions, and use the Create with defaults button

  You can now use the topic to connect with Akka.

- Confluent Cloud CLI

      confluent kafka topic create \
        <topic name> \
        --partitions 3 \
        --replication 2

  You can now use the topic to connect with Akka.

Delivery characteristics

When your application consumes messages from Kafka, it will try to deliver messages to your service in 'at-least-once' fashion while preserving order.

Kafka partitions are consumed independently. When you route messages to a specific entity, or use them to update a view row, by setting the id as the Cloud Event ce-subject attribute on the message, the topic must be partitioned by that same id. This guarantees that the messages are processed in order in the entity or view. Ordering is not guaranteed for messages arriving on different Kafka partitions.

| Correct partitioning is especially important for topics that stream directly into views and transform the updates: when messages for the same subject id are spread over different transactions, they may read stale data and lose updates. |
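The effect of partitioning by subject id can be illustrated with a small simulation. This is a sketch under stated assumptions, not Kafka's or Akka's code: CRC32 stands in for the hash a real Kafka client applies to the partition key, and the names `partition_for` and `route` and the sample messages are hypothetical.

```python
import zlib
from collections import defaultdict


def partition_for(subject_id: str, num_partitions: int) -> int:
    """Map a key to a partition; equal keys always map to the same partition.

    CRC32 is a stand-in hash for illustration, not the hash Kafka clients use.
    """
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    return zlib.crc32(subject_id.encode("utf-8")) % num_partitions


def route(messages, num_partitions):
    """Append (subject_id, payload) pairs to per-partition logs in publish order."""
    partitions = defaultdict(list)
    for subject_id, payload in messages:
        partitions[partition_for(subject_id, num_partitions)].append((subject_id, payload))
    return partitions


if __name__ == "__main__":
    published = [
        ("cart-1", "created"),
        ("cart-2", "created"),
        ("cart-1", "item-added"),
        ("cart-1", "checked-out"),
    ]
    # Every message for a given subject id lands in one partition, in publish order.
    for partition, log in sorted(route(published, 3).items()):
        print(partition, log)
```

Because all messages for one subject id end up in a single partition, their relative order is preserved for the consumer. Messages for different subject ids may sit in different partitions and carry no ordering guarantee relative to each other.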

To achieve at-least-once delivery, messages that are not acknowledged will be redelivered. This means redeliveries of 'older' messages may arrive behind fresh deliveries of 'newer' messages. The first delivery of each message is always in-order, though.
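Since a message can be delivered more than once, and a redelivery can arrive after newer messages, consumers commonly make their processing idempotent. The following is a minimal sketch of one such approach, assuming every message carries a unique id that can be remembered by the consumer. The class `DeduplicatingHandler` and its parameters are hypothetical and not part of the Akka SDK.

```python
class DeduplicatingHandler:
    """Applies each message at most once, keyed by a unique message id."""

    def __init__(self):
        self.totals = {}       # subject id -> accumulated amount
        self.seen_ids = set()  # ids of messages already applied

    def handle(self, message_id: str, subject_id: str, amount: int) -> bool:
        """Apply the update and return True, or return False for a duplicate."""
        if message_id in self.seen_ids:
            # Redelivery of a message that was already applied: ignore it.
            return False
        self.totals[subject_id] = self.totals.get(subject_id, 0) + amount
        self.seen_ids.add(message_id)
        return True


if __name__ == "__main__":
    handler = DeduplicatingHandler()
    deliveries = [
        ("m1", "cart-1", 1),
        ("m2", "cart-1", 2),
        ("m3", "cart-1", 3),
        ("m2", "cart-1", 2),  # redelivery of an older message behind a newer one
    ]
    for message_id, subject_id, amount in deliveries:
        applied = handler.handle(message_id, subject_id, amount)
        print(message_id, "applied" if applied else "skipped as duplicate")
    print(handler.totals)
```

In a real service the set of seen ids would need to be persisted together with the state it protects and bounded in size. The in-memory set here only illustrates the idea.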

When publishing messages to Kafka from Akka, the ce-subject attribute, if present, is used as the Kafka partition key for the message.