404 Not Found

Not Found

The requested URL was not found on this server.

Apache/2.4.63 (Ubuntu) Server at doc.akka.io Port 443

404 Not Found

Not Found

The requested URL was not found on this server.

Apache/2.4.63 (Ubuntu) Server at doc.akka.io Port 443

- [Akka](../index.html)

- [Developing](index.html)

- [Setup and configuration](setup-and-configuration/index.html)

- [Access Control Lists (ACLs)](access-control.html)

# Access Control Lists (ACLs)

The simplest access control that the Akka offers is through Access Control Lists (ACLs). ACLs allow you to

specify lists of what can access your services, at multiple granularity. For example, you can configure a method that

initiates a payment on a payment service to only accept requests from the shopping cart service. You can also control

whether services or methods can be invoked from the Internet.

For a conceptual introduction, see [Access control lists](../concepts/acls.html) in the **Concepts** section.

## Principals

A principal in Akka is an abstract concept that represents anything that can make or be the source of a request.

Principals that are currently supported by Akka include other services, and the internet. Services are identified

by the service name chosen when deployed. Akka uses mutual TLS (mTLS) to associate requests with one or more principals.

Note that requests that have the internet principal are requests that Akka has identified as coming through the Akka

ingress route. This is identified by mTLS, however it does not imply that mTLS has been

used to connect to the ingress from the client in the internet. These are separate hops. To configure mTLS from

internet clients, see [TLS certificates](../operations/tls-certificates.html).

## Configuring ACLs

Akka SDK ACLs consist of two lists of principal matchers. One to allow to invoke a method, and the other to deny to

invoke a method. For a request to be allowed, at least one principal associated with a request must be matched by at

least one principal matcher in the allow list, and no principals associated with the request may match any principal

matchers in the deny list.

An ACL can be configured at the class level, or at the method level. If an ACL is configured at the class level it

applies to all methods in the class. Unless it’s overridden by an ACL added at method level.

Here is an example of an ACL added at the class level on an HTTP Endpoint:

[UserEndpoint.java](https://github.com/akka/akka-sdk/blob/main/samples/doc-snippets/src/main/java/com/example/acl/UserEndpoint.java)

```java

@Acl(allow = @Acl.Matcher(service = "service-a"))

@HttpEndpoint("/user")

public class UserEndpoint extends AbstractHttpEndpoint { // (1)

// ...

}

```

The above ACL only allows incoming traffic from `service-a`. Meaning that only a service named `service-a` deployed on that same project will be able to make calls to this endpoint. This rule is applied to all methods in this HTTP Endpoint.

This rule can be overridden by an ACL on a method level. Here is an example ACL on a method that overrides the class level ACL:

[UserEndpoint.java](https://github.com/akka/akka-sdk/blob/main/samples/doc-snippets/src/main/java/com/example/acl/UserEndpoint.java)

```java

@Acl(allow = @Acl.Matcher(service = "service-a"))

@HttpEndpoint("/user")

public class UserEndpoint extends AbstractHttpEndpoint { // (1)

// ...

@Post

@Acl(allow = @Acl.Matcher(service = "service-b"))

public Done createUser(CreateUser create) {

//... create user logic

return Done.getInstance();

}

}

```

Note that an ACL defined on a method completely overrides an ACL defined at class level. It does not add to

it.

You can combine `allow` and `deny` rules. In the following example, access is open to all other services, except `service-b`.

[UserEndpoint.java](https://github.com/akka/akka-sdk/blob/main/samples/doc-snippets/src/main/java/com/example/acl/UserEndpoint.java)

```java

@Acl(allow = @Acl.Matcher(service = "*"), deny = @Acl.Matcher(service = "service-b"))

```

To allow all traffic:

[UserEndpoint.java](https://github.com/akka/akka-sdk/blob/main/samples/doc-snippets/src/main/java/com/example/acl/UserEndpoint.java)

```java

@Acl(allow = @Acl.Matcher(principal = Acl.Principal.ALL))

```

To allow only traffic from the internet:

[UserEndpoint.java](https://github.com/akka/akka-sdk/blob/main/samples/doc-snippets/src/main/java/com/example/acl/UserEndpoint.java)

```java

@Acl(allow = @Acl.Matcher(principal = Acl.Principal.INTERNET))

```

To allow traffic from `service-a` and `service-b`:

[UserEndpoint.java](https://github.com/akka/akka-sdk/blob/main/samples/doc-snippets/src/main/java/com/example/acl/UserEndpoint.java)

```java

@Acl(allow = { @Acl.Matcher(service = "service-a"), @Acl.Matcher(service = "service-b") })

```

To block all traffic, an ACL with no allows can be configured:

[UserEndpoint.java](https://github.com/akka/akka-sdk/blob/main/samples/doc-snippets/src/main/java/com/example/acl/UserEndpoint.java)

```java

@Acl(allow = {})

```

## Default ACL

If no ACLs are defined at all, Akka will deny requests from both other services and the internet to all components of

an Akka service.

| | The endpoint in the [getting started sample](../getting-started/author-your-first-service.html) has a very permissive ACL that opens access to public internet requests. This allows for easy try out and testing. For production usage, make sure to add appropriate ACL restrictions. |

### Customizing the deny code

When a request is denied, by default a 403 `Forbbiden`, is sent. The code that is returned when a request is denied can be customised using the `deny_code` property.

For example, to make Akka reply with 404, `Not Found`:

[UserEndpoint.java](https://github.com/akka/akka-sdk/blob/main/samples/doc-snippets/src/main/java/com/example/acl/UserEndpoint.java)

```java

@Acl(allow = @Acl.Matcher(service = "service-a"),

denyCode = 404)

@HttpEndpoint("/user")

public class UserEndpoint extends AbstractHttpEndpoint { // (1)

// ...

}

```

Similar to allow and deny rules, deny codes defined at class level are applied to all methods in the component, but

can be overwritten on a per method base.

## Backoffice and self invocations

Invocations of methods from the same service, or from via the backoffice proxy that is available to developers, are always

permitted, regardless of what ACLs are defined on them.

| | The `akka service proxy` command creates an HTTP proxy that forwards all

traffic to a service. This allows you to interact with the service as if it was running locally. |

## Programmatically accessing principals

The current principal associated with a request can be accessed through the `RequestContext`.

| | Endpoints are stateless and each request is served by a new Endpoint instance. Therefore, the `RequestContext` is always a new instance and is associated with the request currently being handled. |

[UserEndpoint.java](https://github.com/akka/akka-sdk/blob/main/samples/doc-snippets/src/main/java/com/example/acl/UserEndpoint.java)

```java

@HttpEndpoint("/user")

public class UserEndpoint extends AbstractHttpEndpoint { // (1)

// ...

var principals = requestContext().getPrincipals();

}

```

| **1** | Let your endpoint extend [AbstractHttpEndpoint](_attachments/api/akka/javasdk/http/AbstractHttpEndpoint.html) to get access to the request specific `RequestContext` through `requestContext()`. |

You can access the current Principals through method `RequestContext.getPrincipals()`

If the request came from another service, the `Principals.getLocalService()` method will return a non-empty `Optional` containing the name of the service that made the request. Akka guarantees that this field will only be

present from an authenticated principal, it can’t be spoofed.

Further, you can use `Principals.isInternet`, `Principals.isSelf` or `Principals.isBackoffice` to verify if the request

was made from the Internet, from the current service or from the Backoffice API respectively. Backoffice requests are

those made using the `akka services proxy` command, they are authenticated and authorized to ensure only developers

of your project can make them.

[UserEndpoint.java](https://github.com/akka/akka-sdk/blob/main/samples/doc-snippets/src/main/java/com/example/acl/UserEndpoint.java)

```java

@Get

public String checkingPrincipals() {

var principals = requestContext().getPrincipals();

if (principals.isInternet()) {

return "accessed from the Internet";

} else if (principals.isSelf()) {

return "accessed from Self (internal call from current service)";

} else if (principals.isBackoffice()) {

return "accessed from Backoffice API";

} else {

return "accessed from another service: " + principals.getLocalService();

}

}

```

## Local development with ACLs

When running locally, ACLs are enabled by default. You can disable the local ACL checks by configuring the following settings in the `application.conf` file:

src/main/resources/application.conf

```conf

akka.javasdk.dev-mode.acl.enabled = false

```

Alternatively, start with:

```shell

mvn compile exec:java -Dakka.javasdk.dev-mode.acl.enabled=false

```

Note that the setting above does not apply to integration tests. See below for how to disable ACLs in integration tests.

### Service identification

If running multiple services in local development, you may want to run with ACLs enabled to verify that they work for

cross-service communication.

Let’s consider the existence of two distinct services called `shopping-cart` and `payment`.

The payment service only accepts request from the shopping cart service and has an ACL defined as

such:

[PaymentEndpoint.java](https://github.com/akka/akka-sdk/blob/main/samples/doc-snippets/src/main/java/com/example/acl/PaymentEndpoint.java)

```java

@Acl(allow = @Acl.Matcher(service = "shopping-cart"))

@HttpEndpoint("/payments")

public class PaymentEndpoint {

//...

}

```

During development, if you want to make calls to the payment service from inside the shopping cart service, then the

shopping cart service needs to identify itself as `shopping-cart` (as per PaymentEndpoint’s ACL). When running

locally, the services identify themselves with the name used in the `artifactId` tag in its `pom.xml` file. This value can be

overwritten in the project’s `application.conf` file by defining a maven property named `akka.javasdk.dev-mode

.service-name`.

The `application.conf` needs to be located in the project’s main resources directory, i.e.: `src/main/resources`.

src/main/resources/application.conf

```conf

akka.javasdk.dev-mode.service-name=shopping-cart

```

| | This property is only applicable when running the services on your local machine. When deployed, the service name

is the one used to create the service and should also match the ACL definition, i.e.: `shopping-cart`. |

If you want to simulate calls to the payment endpoint and pretend that the calls are

coming from the shopping cart service, you can add the header `impersonate-service` to your requests, for example:

```bash

curl -i localhost:9000/payments/{cart-id}/check-transaction \

--header "impersonate-service: shopping-cart"

```

Note that in local development, the services don’t actually authenticate with each other, they only pass their identity in a header. It is assumed in local development that a client can be trusted to set that header correctly.

### Running unit tests

In the generated unit test testkits, the ACLs are ignored.

### Running integration tests

ACLs are enabled by default when running integration tests.

ACL rules will be applied whenever a call is made using testkit’s `HttpClient`. Those calls are interpreted as

originating from the internet. You can disable the ACL checks by overriding the `testKitSettings()` method.

[UserEndpointIntegrationTest.java](https://github.com/akka/akka-sdk/blob/main/samples/doc-snippets/src/test/java/com/example/acl/UserEndpointIntegrationTest.java)

```java

public class UserEndpointIntegrationTest extends TestKitSupport {

@Override

protected TestKit.Settings testKitSettings() {

return super.testKitSettings().withAclDisabled();

}

}

```

Calls made through the `ComponentClient` are internal to the service and therefore no ACL rule is applied.

[Errors and failures](errors-and-failures.html) [JSON Web Tokens (JWT)](auth-with-jwts.html)

404 Not Found

Not Found

The requested URL was not found on this server.

Apache/2.4.63 (Ubuntu) Server at doc.akka.io Port 443

404 Not Found

Not Found

The requested URL was not found on this server.

Apache/2.4.63 (Ubuntu) Server at doc.akka.io Port 443

- [Akka](../index.html)

- [Developing](index.html)

- [Setup and configuration](setup-and-configuration/index.html)

- [JSON Web Tokens (JWT)](auth-with-jwts.html)

# JSON Web Tokens (JWT)

This section describes the practical aspects of using JSON Web Tokens (JWTs). If you are not sure what JWTs are, how they work or how to generate them, see [JSON Web Tokens](../reference/jwts.html) first.

Akka’s JWT support is configured by placing annotations in your endpoints at the class level or method level.

## Authentication

Akka can validate the signature of JWT tokens provided in an Authorization header to grant access to your endpoints. The generation of tokens is not provided by Akka. In [https://jwt.io/](https://jwt.io/) you can find a simple way to generate tokens to start testing your services.

### Bearer token validation

If you want to validate the bearer token of a request, you need to annotate your endpoint with a `@JWT` setting with `JWT.JwtMethodMode.BEARER_TOKEN` and you can add an issuer claim. Like this:

[HelloJwtEndpoint.java](https://github.com/akka/akka-sdk/blob/main/samples/endpoint-jwt/src/main/java/hellojwt/api/HelloJwtEndpoint.java)

```java

import akka.javasdk.annotations.JWT;

import akka.javasdk.annotations.http.HttpEndpoint;

@HttpEndpoint("/hello")

@JWT(validate = JWT.JwtMethodMode.BEARER_TOKEN, bearerTokenIssuers = "my-issuer") // (1)

public class HelloJwtEndpoint extends AbstractHttpEndpoint {

}

```

| **1** | Validate the Bearer is present in the `Authorization` header and authorize only if the claim `iss` in the payload of this token is `my-issuer`. |

Requests are only allowed if they have a bearer token that can be validated by one of the configured keys for the service, all other requests will be rejected. The bearer token must be supplied with requests using the `Authorization` header:

Authorization: Bearer eyJhbGciOiJIUzI1NiIsImtpZCI6ImtleTEifQ.eyJpc3MiOiJteS1pc3N1ZXIifQ.-MLcf1-kB_1OQIZdy9_wYiFZcMOHsHOE8aJryS1tWq4 You can check in [https://jwt.io/](https://jwt.io/) that this token contains the claim in the payload `iss: my-issuer`.

| | It is recommended that `bearerTokenIssuers` contains the issuer that you use in your JWT key configuration. See [https://doc.akka.io/security/jwts.html](https://doc.akka.io/security/jwts.html). Otherwise, any services with a trusted key can impersonate the issuer. |

### Configuring JWT at class level or method level

The above examples show how to configure a JWT token on a class or method level. When the annotation is present on both endpoint class and a method, the configuration on the method overrides the class configuration for that method.

### Using more claims

Akka can be configured to automatically require and validate other claims than the issuer. Multiple `StaticClaim` can be declared and environment variables are supported on the `values` field. A `StaticClaim` can be defined both at class and method level. The provided claims will be used when validating against the bearer token.

```java

@JWT(validate = JWT.JwtMethodMode.BEARER_TOKEN,

bearerTokenIssuers = "my-issuer",

staticClaims = {

@JWT.StaticClaim(claim = "role", values = {"admin", "editor"}), // (1)

@JWT.StaticClaim(claim = "aud", values = "${ENV}.akka.io")}) // (2)

```

| **1** | When declaring multiple values for the same claim, **all** of them will be required when validating the request. |

| **2** | The required value of the `aud` claim includes the value of environment variable `ENV` |

See `akka service deploy -h` for details on how to set environment variables when deploying a service.

| | For specifying an issuer claim (i.e. "iss"), you should still use the `bearerTokenIssuers` and not static claims. |

#### Configuring claims with a pattern

Claims can also be defined using a pattern. This is useful when the value of the claim is not completely known in advance, but it can still be validated against a regular expression. See some examples below:

```java

@JWT(validate = JWT.JwtMethodMode.BEARER_TOKEN,

bearerTokenIssuers = "my-issuer",

staticClaims = {

@JWT.StaticClaim(claim = "role", pattern = "^(admin|editor)$"), // (1)

@JWT.StaticClaim(claim = "sub", pattern = // (2)

"^[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}$"),

@JWT.StaticClaim(claim = "name", pattern = "^\\S+$") // (3)

})

```

| **1** | Claim "role" must have one of 2 values: `admin` or `editor`. |

| **2** | Claim "sub" must be a valid UUID. |

| **3** | Claim "name" must be not empty. |

If the JWT token claim is an array of values, the token will be considered valid if at least one of the claim values matches the pattern. Otherwise, the request is rejected.

| | A claim can be defined with a `values` or a `pattern`, but not both. |

#### Multiple issuers

Multiple issuers may be allowed, by setting multiple `bearer_token_issuer` values:

```java

@JWT(

validate = JWT.JwtMethodMode.BEARER_TOKEN,

bearerTokenIssuers = { "my-issuer", "my-issuer2" },

staticClaims = @JWT.StaticClaim(claim = "sub", values = "my-subject")

)

```

The token extracted from the bearer token must have one of the two issuers defined in the annotation.

Akka will place the claims from the validated token in the [RequestContext](_attachments/api/akka/javasdk/http/RequestContext.html), so you can access them from your service via `getJwtClaims()`. The `RequestContext` is accessed by letting the endpoint extend [AbstractHttpEndpoint](_attachments/api/akka/javasdk/http/AbstractHttpEndpoint.html) which provides the method `requestContext()`, so you can retrieve the JWT claims like this:

[HelloJwtEndpoint.java](https://github.com/akka/akka-sdk/blob/main/samples/endpoint-jwt/src/main/java/hellojwt/api/HelloJwtEndpoint.java)

```java

import akka.javasdk.annotations.http.HttpEndpoint;

public class HelloJwtEndpoint extends AbstractHttpEndpoint {

@JWT(

validate = JWT.JwtMethodMode.BEARER_TOKEN,

bearerTokenIssuers = { "my-issuer", "my-issuer2" },

staticClaims = @JWT.StaticClaim(claim = "sub", values = "my-subject")

)

@Get("/claims")

public String helloClaims() {

var claims = requestContext().getJwtClaims(); // (1)

var issuer = claims.issuer().get(); // (2)

var sub = claims.subject().get(); // (2)

return "issuer: " + issuer + ", subject: " + sub;

}

}

```

| **1** | Access the claims from the request context. |

| **2** | Note that while calling `Optional#get()` is generally a bad practice, here we know the claims must be present given the `@JWT` configuration. |

## Running locally with JWTs enabled

When running locally, by default, a dev key with id `dev` is configured for use. This key uses the JWT `none` signing algorithm, which means the signature of the received JWT tokens is not validated. Therefore, when calling an endpoint with a bearer token, only the presence and values of the claims are validated.

## JWTs when running integration tests

When running integration tests, JWTs will still be enforced but its signature will not be validated, similarly to what is described above for when running locally. Thus, when making calls in the context of integration testing, make sure to inject a proper token with the required claims, as shown below:

[HelloJwtIntegrationTest.java](https://github.com/akka/akka-sdk/blob/main/samples/endpoint-jwt/src/test/java/hellojwt/api/HelloJwtIntegrationTest.java)

```java

@Test

public void shouldReturnIssuerAndSubject() throws JsonProcessingException {

String bearerToken = bearerTokenWith(Map.of("iss", "my-issuer", "sub", "my-subject")); // (1)

StrictResponse call = httpClient

.GET("/hello/claims")

.addHeader("Authorization", "Bearer " + bearerToken) // (2)

.responseBodyAs(String.class)

.invoke();

assertThat(call.body()).isEqualTo("issuer: my-issuer, subject: my-subject");

}

private String bearerTokenWith(Map claims) throws JsonProcessingException {

// setting algorithm to none

String header = Base64.getEncoder()

.encodeToString(

"""

{

"alg": "none"

}

""".getBytes()

); // (3)

byte[] jsonClaims = JsonSupport.getObjectMapper().writeValueAsBytes(claims);

String payload = Base64.getEncoder().encodeToString(jsonClaims);

// no validation is done for integration tests, thus no signature required

return header + "." + payload; // (4)

}

```

| **1** | Use a helper method to create a JWT token with 2 claims: issuer and subject. |

| **2** | Inject the bearer token as header with the key `Authorization`. |

| **3** | Use static `Base64` encoding of `{ "alg": "none" }`. |

| **4** | Note that you do not need to provide a signature, thus the token has only 2 parts, header and payload. |

[Access Control Lists (ACLs)](access-control.html) [Run a service locally](running-locally.html)

- [Akka](../index.html)

- [Developing](index.html)

- [Integrations](integrations/index.html)

- [Message broker integrations](message-brokers.html)

# Message broker integrations

Akka offers built-in message broker integrations for use with the Akka Consumer and Producer component. These built-in integrations are available for Google Cloud Pub/Sub and hosted Kafka services. For other broker technologies, Java client libraries can be used directly to implement publishing of messages.

## Using built-in integrations

For the built-in technologies, Akka decouples the broker configuration from the implementation of the consumer or producer. The topic name is referenced independently of the broker technology, as demonstrated in [Consume from a message broker Topic](consuming-producing.html#consume_topic) and [Producing to a message broker Topic](consuming-producing.html#topic_producing).

All connection details are managed at the Akka project level. For configuration instructions, refer to [Configure message brokers](../operations/projects/message-brokers.html).

The Akka SDK testkit has built-in support for simulating message brokers. See [Testing the Integration](consuming-producing.html#testing) for more details. For running locally with a broker, refer to [running a service with broker support](running-locally.html#_local_broker_support).

## Producing to other broker technologies

Other message broker technologies can be integrated into an Akka service by utilizing their respective client libraries. Additionally, the [Akka libraries Alpakka project](https://doc.akka.io/libraries/alpakka/current) provides Akka-native solutions for integrating various services.

We continuously evaluate additional integrations for potential built-in support in Akka. If you have specific requirements, please contact us at [support@akka.io](mailto:support@akka.io).

## See also

- [Configure message brokers](../operations/projects/message-brokers.html)

- `akka projects config` commands

- [Akka integrations through Alpakka](https://doc.akka.io/libraries/alpakka/current)

[Component and service calls](component-and-service-calls.html) [Streaming](streaming.html)

- [Akka](../index.html)

- [Developing](index.html)

- [Integrations](integrations/index.html)

- [Streaming](streaming.html)

# Streaming

In many cases, Akka takes care of streaming and is using end-to-end backpressure automatically. Akka will also use the event journal or message brokers as durable buffers to decouple producers and consumers. You would typically only have to implement the functions to operate on the stream elements. For example:

- Views are updated asynchronously from a stream of events. You implement the update handler, which is invoked for each event.

- Views can stream the query results, and the receiver demands the pace.

- Consumers process a stream of events. You implement a handler to process each event. Same approach when the source is an entity within the service, another service, or a message broker topic.

- Consumers can produce events to other services or publish to a message broker topic. The downstream consumer or publisher defines the pace.

## Using Akka Streams

Sometimes, the built-in streaming capabilities mentioned above are not enough for what you need, and then you can use Akka Streams. A few examples where Akka Streams would be a good solution:

- Streaming from [Endpoints](http-endpoints.html#_advanced_http_requests_and_responses)

- For each event in a [Consumer](consuming-producing.html) you need to materialize a finite stream to perform some actions in a streaming way instead of composing those actions with `CompletionStage` operations.

- the stream can be run from a [Consumer](consuming-producing.html) event handler

- e.g. for each event, download a file from AWS S3, unzip, for each row send a command to entity

- e.g. for each event, stream file from AWS S3 to Azure Blob

- Streams that are continuously running and are executed per service instance.

- the stream can be started from the [Setup](setup-and-dependency-injection.html#_service_lifecycle)

- e.g. integration with AWS SQS

For running Akka Streams you need a so-called materializer, which can be injected as a constructor parameter of the component, see [dependency injection](setup-and-dependency-injection.html#_dependency_injection).

You find more information about Akka Streams in the [Akka libraries documentation](https://doc.akka.io/libraries/akka-core/current/stream/stream-introduction.html). Many streaming connectors are provided by [Alpakka](https://doc.akka.io/libraries/alpakka/current/).

[Message broker integrations](message-brokers.html) [Retrieval-Augmented Generation (RAG)](rag.html)

- [Akka](../index.html)

- [Developing](index.html)

- [Setup and configuration](setup-and-configuration/index.html)

- [Run a service locally](running-locally.html)

# Run a service locally

Running a service locally is helpful to test and debug. The following sections provide commands for starting and stopping a single service locally.

## Prerequisites

In order to run your service locally, you’ll need to have the following prerequisites:

- Java 21, we recommend [Eclipse Adoptium](https://adoptium.net/marketplace/)

- [Apache Maven](https://maven.apache.org/install.html) version 3.9 or later

- `curl` command-line tool

## Starting your service

As an example, we will use the [Shopping Cart](../getting-started/shopping-cart/build-and-deploy-shopping-cart.html) sample.

To start your service locally, run the following command from the root of your project:

```command

mvn compile exec:java

```

## Invoking your service

After you start the service it will accept invocations on `localhost:9000`. You can use [cURL](https://curl.se/) in another shell to invoke your service.

### Using cURL

Add an item to the shopping cart:

```command

curl -i -XPUT -H "Content-Type: application/json" localhost:9000/carts/123/item -d '

{"productId":"akka-tshirt", "name":"Akka Tshirt", "quantity": 10}'

```

Get cart state:

```command

curl localhost:9000/carts/123

```

## Shutting down the service

Use `Ctrl+c` to shut down the service.

## Run from IntelliJ

The [getting started sample](../getting-started/author-your-first-service.html) and other samples include a run configuration for IntelliJ. In the toolbar you should see:

This is a Maven run configuration for `mvn compile exec:java`. You can also run this with the debugger and set breakpoints in the components.

## Local console

The local console gives you insights of the services that you are running locally.

To run the console you need to install:

- [Akka CLI](../operations/cli/installation.html)

Start the console with the following command from a separate terminal window:

```command

akka local console

```

Open [http://localhost:9889/](http://localhost:9889/)

Start one or more services as described in [Starting your service](about:blank#_starting_your_service) and they will show up in the console. You can restart the services without restarting the console.

## Running a service with persistence enabled

By default, when running locally, persistence is disabled. This means the Akka Runtime will use an in-memory data store for the state of your services. This is useful for local development since it allows you to quickly start and stop your service without having to worry about cleaning the database.

However, if you want to run your service with persistence enabled to keep the data when restarting, you can configure

the service in `application.conf` with `akka.javasdk.dev-mode.persistence.enabled=true` or as a system property when starting the service locally.

```command

mvn compile exec:java -Dakka.javasdk.dev-mode.persistence.enabled=true

```

To clean the local database look for `db.mv.db` file in the root of your project and delete it.

## Running a service with broker support

By default, when running locally, broker support is disabled. When running a service that declares consumers or producers locally, you need to configure the broker with property `akka.javasdk.dev-mode.eventing.support=kafka` in `application.conf` or as a system property when starting the service.

```command

mvn compile exec:java -Dakka.javasdk.dev-mode.eventing.support=kafka

```

For Google PubSub Emulator, use `akka.javasdk.dev-mode.eventing.support=google-pubsub-emulator`.

| | For Kafka, the local Kafka broker is expected to be available on `localhost:9092`. For Google PubSub, the emulator is expected to be available on `localhost:8085`. |

## Running multiple services locally

A typical application is composed of one or more services deployed to the same Akka project. When deployed under the same project, two different services can make [calls to each other](component-and-service-calls.html) or [subscribe to each other’s event streams](consuming-producing.html) by simply using their logical names.

The same can be done on your local machine by configuring the services to run on different ports. The services

will discover each other by name and will be able to interact.

The default port is 9000, and only one of the services can run on the default port. The other service must be configured with another port.

This port is configured in `akka.javasdk.dev-mode.http-port` property in the `src/main/resources/application.conf` file.

```xml

akka.javasdk.dev-mode.http-port=9001

```

With both services configured, we can start them independently by running `mvn compile exec:java` in two separate terminals.

## Running a local cluster

For testing clustering behavior and high availability scenarios, you can run your Akka service as a local cluster with multiple nodes. This allows you to simulate a distributed environment on your local machine.

### Database requirement

To run in cluster mode, you need a shared database that all nodes can connect to. The `local-nodeX.conf` files configure the application to connect to a PostgreSQL database.

Before starting your cluster nodes, you must start a local PostgreSQL database. We recommend using Docker Compose for this purpose. Create a `docker-compose.yml` file with the following configuration:

```yaml

services:

postgres-db:

image: postgres:17

ports:

- 5432:5432

environment:

POSTGRES_USER: postgres

POSTGRES_PASSWORD: postgres

healthcheck:

test: ["CMD", "pg_isready", "-q", "-d", "postgres", "-U", "postgres"]

interval: 5s

retries: 5

start_period: 5s

timeout: 5s

```

Start the database with:

```command

docker compose up -d

```

### Cluster configuration

You can create a local cluster with up to 3 nodes. Each node requires its own configuration file and will run on a different HTTP port.

To start each node, use the following commands in separate terminal windows:

```command

# Node 1 (runs on port 9000)

mvn compile exec:java -Dconfig.resource=local-node1.conf

# Node 2 (runs on port 9001)

mvn compile exec:java -Dconfig.resource=local-node2.conf

# Node 3 (runs on port 9002)

mvn compile exec:java -Dconfig.resource=local-node3.conf

```

### Port assignment

The cluster nodes use sequential port numbering based on your configured HTTP port:

- **Node 1**: Uses the standard HTTP port (default: 9000)

- **Node 2**: Uses standard port + 1 (default: 9001)

- **Node 3**: Uses standard port + 2 (default: 9002)

If you have configured a custom HTTP port in your `application.conf` (for example, 9010), the cluster nodes will use:

- **Node 1**: 9010

- **Node 2**: 9011

- **Node 3**: 9012

This ensures that each node in the cluster has its own unique port while maintaining a predictable numbering scheme.

[JSON Web Tokens (JWT)](auth-with-jwts.html) [AI model provider configuration](model-provider-details.html)

- [Akka](../../index.html)

- [Operating](../index.html)

- [Akka Automated Operations](../akka-platform.html)

- [Organizations](index.html)

- [Regions](regions.html)

# Regions

## Overview

Akka projects are deployed to specific regions, which are tied to the backend infrastructure Akka uses to support projects. Regions:

- Are linked to a particular cloud provider (e.g., GCP, AWS)

- Exist in specific geographic locations (e.g., Europe, North America)

- May have unique performance characteristics

- Can provide varying levels of isolation (e.g., dedicated plans offer isolated regions)

### For Organizations

When an organization is created, it is assigned access to one or more regions. A region must be specified when creating a new project within the organization.

For example, if the organization `myorg` has access to the region `aws-us-east-`2, you would create a project in that region using the following command:

```command

akka project new myproject --organization myorg --region aws-us-east-2

```

### Finding Available Regions

If you’re unsure which regions your organization has access to, there are two options:

1. **Error Prompt**: If you omit the `--region` flag when creating a new project, Akka will inform you of the available regions in the error message. For instance:

```command

$ akka project new myproject --organization myorg

--region is a required flag. The following regions are available: [aws-us-east-2]

```

2. **List Regions Command**: You can list the regions directly using the following command:

```command

akka regions list --organization myorg

```

Example output:

```command

NAME ORGANIZATION

aws-us-east-2 db805ff5-4fbd-4442-ab56-6e6a9a3c200a

```

## Requesting new regions

By default organizations are limited to which regions they can use, particularly for trial organizations. If you would like access to other regions, you can use the **?** in the upper right of the [Akka Console](https://console.akka.io/) to request additional regions via the **Contact support** menu item.

## BYOC and self-hosted regions

Akka also supports Bring Your Own Cloud (BYOC) meaning that we can run regions in your AWS, Azure, or Google Cloud account. These are not available to trial users.

These regions work just like any other regions and are exclusive to your workloads.

To get a BYOC region setup you can [Contact Us](https://www.akka.io/contact).

To learn more about self-hosted Akka regions please To get a BYOC region setup you can [Submit a request](https://www.akka.io/contact) for more information.

## See also

- `akka regions` commands

- `akka project regions` commands

[Manage users](manage-users.html) [Billing](billing.html)

- [Akka](../../index.html)

- [Operating](../index.html)

- [Akka Automated Operations](../akka-platform.html)

- [Organizations](index.html)

- [Billing](billing.html)

# Billing

Akka provides detailed billing information to help organizations monitor and manage the operational costs associated with their projects. Users with the **billing-admin** role can view:

- **Cost breakdown** for each project in the organization.

- **Month-to-date aggregate cost**.

- **Cost forecast** based on current and projected usage across all projects.

Billing data is accessible only to users with the **billing-admin** role. For more information on assigning this role, see the [Assigning the billing-admin Role](about:blank#assigning_billing_admin) section below.

## Billing Interface

For **billing-admin** users, a billing icon appears in the [Akka Console’s](https://console.akka.io/) side navigation. Clicking this icon opens the billing interface, where users can select the billing month and organization to view detailed billing data.

If a user is a billing admin for multiple organizations, they can switch between organizations in the billing UI.

In the billing UI:

- **Month-to-date costs** and a **cost forecast** for the current month are displayed in the upper-right corner.

- Billing data for each project within the selected organization is broken down into the following categories:

- **Network Data Transfer**: Charges for data transfer across all services, measured in GB.

- **Data Operations**: Total read and write operations for all services.

- **Data Persistence**: Total amount of data persisted during the month, measured in GB-Hours.

These are all metered at the project, region, service scope and you can see the totals across organization, project, region, or service as you choose.

For more details on pricing, refer to [Akka Pricing](https://akka.io/pricing#).

## Assigning the billing-admin Role

The organization superuser can assign the billing-admin role in one of two ways:

1. **Invite a User**: Use the following command to invite a user to the organization and assign the billing-admin role:

```command

akka organizations invitations create --organization \

--email --role billing-admin

```

2. **Assign an Existing User**: If the user is already a member, the superuser can assign the billing-admin role directly:

```command

akka organization users add-binding --organization \

--email --role billing-admin

```

For more details on managing users and their roles, see the [Managing organization users](manage-users.html) section.

## See also

- [Managing organization users](manage-users.html)

- [Akka Pricing](https://akka.io/pricing#)

[Regions](regions.html) [Projects](../projects/index.html)

- [Akka](../../index.html)

- [Operating](../index.html)

- [Akka Automated Operations](../akka-platform.html)

- [Organizations](index.html)

# Organizations

An *Organization* in Akka is the root of the management hierarchy and serves as a container for all *Projects* where *Services* are deployed. It provides the context in which users operate, both in the *Akka Console* and *Akka CLI*.

To switch between organizations, you must specify the target organization’s context.

## Key Concepts

- **User Membership**: A user can belong to multiple organizations, but membership does not automatically grant access to the organization’s projects.

- **Regions**: Each organization has access to specific regions. Projects created within the organization are located in one or more of these regions.

- **Billing**: Billing is handled at the organization level, with all costs collected and paid per organization.

- **Role-Based Access**: Membership and project access within an organization are managed through role bindings.

## Details

- **Organization Administrator**: The first user of an organization is an Organization Administrator, who can invite or add users with different roles.

- **Project Ownership**: Each project is owned by a single organization. Users must ensure that their projects are associated with the correct organization.

- **Region Assignment**: Projects created for an organization are assigned to one or more of the organization’s available regions.

## Usage

You can determine which organizations the current user is a member of using the following command:

```command

akka organizations list

```

Example output:

```none

NAME ID ROLES

acme 1a4a9d5d-1234-5678-910a-9c8fb3700da7 superuser

```

| | You can refer to the organization in `akka` commands using either the "friendly name" or the "ID" with the `--organization` flag. See the page on [managing organization users](manage-users.html) to find more, including the use of organization roles. |

## Topics

- [Managing organization users](manage-users.html)

- [Regions](regions.html)

- [Billing](billing.html)

[Akka Automated Operations](../akka-platform.html) [Manage users](manage-users.html)

- [Akka](../../index.html)

- [Operating](../index.html)

- [Akka Automated Operations](../akka-platform.html)

- [Organizations](index.html)

- [Manage users](manage-users.html)

# Managing organization users

Access to an organization is controlled by assigning roles to users. The available roles are: **superuser**, **project-admin**, **billing-admin**, and **member**.

| | Akka supports access management via Single Sign-on (SSO) through the *OpenID Connect* standard. For details, check [OpenID Connect Setup](../../reference/security/oidc-setup.html). |

| Permission | superuser | project-admin | billing-admin | member |

| --- | --- | --- | --- | --- |

| View organization users | ✅ | ✅ | ✅ | ✅ |

| Manage organization users | ✅ | ❌ | ❌ | ❌ |

| Create projects | ✅ | ✅ | ❌ | ❌ |

| Assign regions to projects | ✅ | ✅ | ❌ | ❌ |

| View all projects | ✅ | ❌ | ❌ | ❌ |

| Manage project users | ✅ | ❌ | ❌ | ❌ |

| Delete projects | ✅ | ❌ | ❌ | ❌ |

| All other project/service operations | ❌ | ❌ | ❌ | ❌ |

| View organization billing data | ❌ | ❌ | ✅ | ❌ |

| | Project-level operations are accessed via project-specific roles. A superuser has a subset of project permissions, including the ability to assign roles (including to themselves). When a user creates a project, they are automatically granted admin access to it. (see [granting project roles](../projects/manage-project-access.html)) |

The **member** role allows project admins to add users to their projects without needing to invite them to the organization.

## Listing role bindings

You can list role bindings within an organization using the following command:

```command

akka organization users list-bindings --organization

```

Example output:

```none

ROLE BINDING ID ROLE USERNAME EMAIL NAME

fd21044c-b973-4220-8f65-0f7d317bb23b superuser jane.citizen jane.citizen@example.com Jane Citizen

120b75b6-6b53-4ebb-b23b-2272be974966 member john.smith john.smith@example.com John Smith

```

## Granting a role

| | When using *OpenID Connect* (OIDC), see [OIDC setup](../../reference/security/oidc-setup.html#assigning_organization_level_roles). |

You can grant a role to a user in two ways:

### 1. Invite a User by Email

Send an email invitation with the following command:

```command

akka organizations invitations create --organization \

--email --role

```

The user will receive an email to join the organization. Once accepted, the role binding will be created.

### 2. Add a Role Directly

If the user is already a member, you can assign roles directly:

- By e-mail:

akka organization users add-binding --organization \

--email --role

- By username:

akka organizations users add-binding --organization \

--username --role

## Deleting a role binding

To delete a role binding, first list the users to get the role binding ID. Then, use the following command:

```command

akka organizations users delete-binding --organization \

--id

```

## Managing invitations

View outstanding invitations:

```command

akka organizations invitations list --organization

```

Example output:

```none

EMAIL ROLE

jane.citizen@example.com member

```

Invitations expire after 7 days, but you can cancel them manually:

```command

akka organizations invitations cancel --organization \

--email

```

To resend an invitation, cancel the previous one and reissue the invite.

## See also

- [Managing project users](../projects/manage-project-access.html)

- [OpenID Connect Setup](../../reference/security/oidc-setup.html)

- `akka organizations users` commands

- `akka organizations invitations` commands

[Organizations](index.html) [Regions](regions.html)

- [Akka](../../index.html)

- [Operating](../index.html)

- [Akka Automated Operations](../akka-platform.html)

- [Regions](index.html)

# Regions

Projects in Akka can span across regions with data automatically replicated between all the regions. This increases availability as the regions can either be separate cloud / geographic regions or can be separate logical regions within the same cloud / geographic region. This gives you a high level of control for managing failure domains or fault boundaries in your applications. This is sometimes referred to as blast radius control.

[Regions](../organizations/regions.html) are specified in the project configuration. All services in the project are deployed to all regions. One of the regions will be specified as the primary region. The primary region indicates the source from which region resources (services, routes, secrets, etc.) should be replicated from. By default the primary region is the first one added to the project at deployment time.

Additionally, the primary region also indicates where primary data copies should reside in stateful components like Event Sourced Entities, Key Value Entities or Workflow when using the `pinned-region` primary selection mode.

| | Regions appear at two different scopes in Akka. The first is at the [Organizations](../organizations/index.html) scope. This conveys which regions are available to your organization. The second is at the project scope, which conveys which regions a specific project is bound to. |

To see what regions have been configured for your project, you can run:

```command

akka project regions list

```

## Adding a region to a project

A region can be added to a project if the organization that owns the project has access to that region. To see which regions your organization has access to, run the `akka regions list` command:

```command

akka regions list --organization my-organization

```

To add one of these regions to your project, run:

```command

akka project regions add gcp-us-east1

```

When you deploy a service it will run in all regions of the project. When you add a region to a project the existing services will automatically start in the new region.

| | Project region assignment is restricted to users that have `project-admin` or `superuser` role in the encompassing organization to which the project belongs, as documented in [Manage users](../organizations/manage-users.html). |

### Selecting primary for stateful components

Stateful components like Event Sourced Entities and Workflows can be replicated to other regions. For each stateful component instance there is a primary region, which handles all write requests. Read requests can be served from any region. See [Event Sourced Entity replication](../../sdk/event-sourced-entities.html#_replication), [Key Value Entity replication](../../sdk/key-value-entities.html#_replication) and [Workflow replication](../../sdk/workflows.html#_replication) for more information about read and write requests.

There are two operational choices for deciding where the primary is located:

- **pinned-region** mode - one region is defined as the primary for the project, and all stateful component instances will use that region as primary

- **request-region** mode - the primary is selected by each individual component instance based on where the write requests occur

| | Before changing the primary selection mode, make sure that you understand and follow the steps described in the [How to](about:blank#_how_to). |

The pinned-region mode is used by default. To use request-region mode you need to deploy the service with a [service descriptor](../services/deploy-service.html#apply):

```yaml

name: my-service

service:

image: my-container-uri/container-name:tag-name

replication:

mode: replicated-read

replicatedRead:

primarySelectionMode: request-region

```

When using request-region mode, all regions must be available when the first write request is made to an entity or when switching primary region by handling a write request for an entity in another region than the currently selected primary region.

It is possible to switch between pinned-region and request-region mode, but this should only be done with careful consideration of the consequences. For example, when changing the primary, not all updates may have been replicated and the new primary may not be fully up to date. This is why there is a third mode. This is a read-only mode for all regions, which causes all write requests to be rejected. This can be used as an intermediate stage to ensure that all updates have been replicated before the primary is changed.

To use this read-only mode for all regions you set `primarySelectionMode` to `none` in the service descriptor:

```yaml

name: my-service

service:

image: my-container-uri/container-name:tag-name

replication:

mode: replicated-read

replicatedRead:

primarySelectionMode: none

```

To use the pinned-region primary selection mode again you set `pinned-region` in the service descriptor:

```yaml

name: my-service

service:

image: my-container-uri/container-name:tag-name

replication:

mode: replicated-read

replicatedRead:

primarySelectionMode: pinned-region

```

## Setting the primary region of a project

Changing the primary region of a project is how you control failover or migration in Akka.

| | The primary region of a project is also the region that will be used as primary for stateful components in the pinned-region selection mode. Changing primary should only be done with careful consideration of the consequences, and it is recommended to first change to the read-only mode in all regions. See [Selecting primary for stateful components](about:blank#selecting-primary). |

| | Before changing the primary region, make sure that you understand and follow the steps described in the [How to](about:blank#_how_to). |

To change the primary region of a project run:

```command

akka project regions set-primary gcp-us-east1

```

| | It may be necessary to clear the region cache when running the `akka` command on other machines before this change will be picked up. This can be done by running `akka config clear-cache`. |

## Managing resources in a multi region project

Akka projects are built to span regions. To accomplish this, Akka considers resources in two ways.

### Global resources

In an Akka project, services, routes, secrets, and observability configuration are all *global resources* in that they will deploy to all regions that the project is bound to.

The underlying replication mechanism is that when resources are deployed they first deploy to the primary region. Then a background process will asynchronously copy them to the remain regions. This background synchronization process is eventually consistent.

The `list` and `get` commands for multi-region resources display the sync status for global resources. These commands will show the resource in the primary region by default. You can specify which region you want to get the resource from by passing the `--region` flag. If you want to view the resource in all regions, you can pass the `--all-regions` flag.

### Regional resources

There are certain circumstances where it may not be appropriate to have the same resource synced to all regions. Some common reasons are as follows:

- A route may need to be served from a different hostname in each region.

- A service may require different credentials for a third party service for each region, requiring a different secret to be deployed to each region.

- A different observability configuration may be needed in different regions, such that metrics and logs are aggregated locally in the region.

To deploy a resource as a regional resource, you can specify a `--region` flag to specify which region you want to create the resource in. When updating or deleting the resource, the `--region` flag needs to be passed.

### Switching between global and regional resources

If you have a global resource that you want to change to being a regional resource, this can be done by updating the resource, passing a `--region` flag, and passing the `--force-regional` flag to change it from a global to a regional resource. You must do this on the primary region first, otherwise the resource synchronization process may overwrite your changes.

If you have a regional resource that you want to change to being a global resource, this can be done by updating the resource without specifying a `--region` flag, but passing the `--force-global` flag instead. The command will perform the update in the primary region, and that configuration will be replicated to, and overwrite, the configuration in the rest of the regions.

## How to

There can be several reasons for changing multi-region resources and the primary of stateful components. In this section we describe a few scenarios and provide a checklist of the recommended procedure.

### Observe replication status

You can see throughput, lag, and errors in the replication section in the Control Tower. The replication lag is the time from when the events were created until they were received in the other region. Some errors may be normal, since the connections are sometimes restarted.

### Add a region

1. Follow the instructions in [Adding a region to a project](about:blank#_adding_a_region_to_a_project).

2. You have to [deploy the services](../services/deploy-service.html) again because the container images don’t exist in the container registry of the new region, unless you use a global container registry.

3. You need to [expose the services](../services/invoke-service.html) in the new region.

4. Stateful components are automatically replicated to the new region. This may take some time, and you can see progress in the replication section in the Control Tower. The event consumption lag will at first be high and then close to zero when the replication has been completed.

### Switch from pinned-region to request-region primary selection mode for stateful components

The default primary selection mode for stateful components is the pinned-region mode, as explained in [Selecting primary for stateful components](about:blank#selecting-primary), and you might want to change that to request-region after the first deployment. That section also describes how you change the primary selection mode with a service descriptor.

Component instances that have already been created will continue to have their primary in the original pinned-region primary region, and will switch primary region when write requests occur in the other region(s).

1. First, change to the `none` primary selection mode. This is a read-only mode for all regions and all write requests will be rejected. The reason for changing to this intermediate mode is to make sure that all events have been replicated without creating new events.

2. Wait until the deployment of the `none` primary selection mode has been successfully propagated to all regions. Observe in the Akka Console that the rolling update has been completed in all regions. You can also make sure that replicated events reach zero in the replication section in the Control Tower.

3. Change to `request-region` primary selection mode.

### Switch from request-region to pinned-region primary selection mode for stateful components

pinned-region mode takes precedence over request-region in the sense that a component instance will change its primary to the pinned-region region when there is a new write request to the component instance, and it persists a new event.

[Selecting primary for stateful components](about:blank#selecting-primary) describes how you change the primary selection mode with a service descriptor.

1. First, change to the `none` primary selection mode. This is a read-only mode for all regions and all write requests will be rejected. The reason for changing to this intermediate mode is to make sure that all events have been replicated without creating new events.

2. Wait until the deployment of the `none` primary selection mode has been successfully propagated to all regions. Observe in the Akka Console that the rolling update has been completed in all regions. You can also make sure that replicated events reach zero in the replication section in the Control Tower.

3. Change to `pinned-region` primary selection mode.

### Change the pinned-region primary region for stateful components

You might want to change the pinned-region primary for stateful components if you migrate from one region to another, or need to bring down the primary region for maintenance for a while.

[Selecting primary for stateful components](about:blank#selecting-primary) describes how you change the primary selection mode with a service descriptor.

1. First, change to the `none` primary selection mode. This is a read-only mode for all regions and all write requests will be rejected. The reason for changing to this intermediate mode is to make sure that all events have been replicated without creating new events.

2. Wait until the deployment of the `none` primary selection mode has been successfully propagated to all regions. Observe in the Akka Console that the rolling update has been completed in all regions. You can also make sure that replicated events reach zero in the replication section in the Control Tower.

3. Follow instructions in [Setting the primary region of a project](about:blank#_setting_the_primary_region_of_a_project).

4. Change to `pinned-region` primary selection mode.

### Change primary region for disaster recovery

If a region is failing you might want to fail over to another region that is working.

1. If the failing region is the primary region, follow instructions in [Setting the primary region of a project](about:blank#_setting_the_primary_region_of_a_project) and change the primary to a non-failing region.

2. If you are using `request-region` primary selection you should [Switch from request-region to pinned-region primary selection mode for stateful components](about:blank#_switch_from_request_region_to_pinned_region_primary_selection_mode_for_stateful_components). Depending on how responsive the failing region is this might not be possible to deploy to the failing region, but you should deploy it to the non-failing regions. The reason for this is that otherwise write requests will still be routed to the failing region for component instances that have their primary in the failing region.

3. Be aware that events that were written in the failing region and had not been replicated to other regions before the hard failover will be replicated when the regions are connected again. There are no guarantees regarding the order of these "old" events and any new events written by the new primary, which could lead to conflicting states across regions.

For faster fail over you can consider the alternative of [Fast downing of region for disaster recovery](about:blank#_fast_downing_of_region_for_disaster_recovery), but the drawback is that is more difficult to recover the failed region.

### Fast downing of region for disaster recovery

If the communication with a region is failing or it is completely unresponsive, you might want to take out the failing region, without re-deploying the services in the healthy regions.

1. Use `down-region` from the CLI:

```command

akka project settings down-region gcp-us-east1 aws-us-east-2 --region aws-us-east-2

```

In the above example, `gcp-us-east1` is the failed region that is downed. `aws-us-east-2` is selected as new `pinned-region`, and the CLI command is sent to `aws-us-east-2`.

2. You can send the same command to the failed region, but it will probably not be able to receive it, and that is fine.

```command

akka project settings down-region gcp-us-east1 aws-us-east-2 --region gcp-us-east1

```

3. If you have more than two regions you should send the same command to all other regions using the `--region` flag.

4. You should try to stop the services in the downed region, if they are still running.

```command

akka service pause my-service --region gcp-us-east1

```

[Data migration](../services/data-management.html) [TLS certificates](../tls-certificates.html)

- [Akka](../../index.html)

- [Operating](../index.html)

- [Akka Automated Operations](../akka-platform.html)

- [Integrating with CI/CD tools](index.html)

- [CI/CD with GitHub Actions](github-actions.html)

# CI/CD with GitHub Actions

Use the Akka [setup-akka-cli-action](https://github.com/akka/setup-akka-cli-action) GitHub Action to use GitHub Actions with your Akka project. The action supports commands for installing, authenticating, and invoking the Akka CLI. Releases are tracked [on the GitHub releases page](https://github.com/lightbend/setup-akka-action/releases).

## Prerequisites

To use the Akka GitHub Action, you’ll need to:

- Create a [service token](index.html#create_a_service_token) for your project

- Get the UUID of your project, which can be obtained by running `akka projects list`

## Configure variables

The GitHub Action uses two required variables to authenticate and set the project you want to work on correctly:

- `AKKA_TOKEN`: The Akka service token

- `AKKA_PROJECT_ID`: The project ID for the Akka project you’re using

These variables should be configured as [secrets](https://docs.github.com/en/actions/reference/encrypted-secrets#creating-encrypted-secrets-for-a-repository) for your repository.

## Create a workflow

Follow these steps to create a workflow to invoke the GitHub Action for your project:

1. Create a folder named `.github` at the root of the project folder.

2. Create a file named `config.yml` in the `.github` folder.

3. Open `config.yml` for editing and add:

```yaml

name: akka

on:

push:

branches: [ main ]

jobs:

deploy:

runs-on: ubuntu-latest

steps:

- name: Install Akka CLI

uses: akka/setup-akka-cli-action@v1

with:

token: ${{ secrets.AKKA_TOKEN }} // (1)

project-id: ${{ secrets.AKKA_PROJECT_ID }} // (2)

- name: List services // (3)

run: akka service list // (4)

```

| **1** | The Akka authentication token. |

| **2** | The UUID of the project to which the service belongs. |

| **3** | A unique name for this workflow step. The example lists Akka services. |

| **4** | The command to execute. |

[Integrating with CI/CD tools](index.html) [CLI](../cli/index.html)

- [Akka](../../index.html)

- [Operating](../index.html)

- [Akka Automated Operations](../akka-platform.html)

- [Integrating with CI/CD tools](index.html)

# Integrating with CI/CD tools

Akka development projects can be integrated into a Continuous Integration/Continuous Delivery (CI/CD) process using the Akka CLI. To use the Akka CLI in your CI/CD workflow, you’ll need a service token. A service token is a token tied to a single project, that allows authenticating and performing actions on that project. Service tokens have the following permissions on the project they are created for:

| View project | ✅ |

| Admin project | ❌ |

| View/deploy/update services | ✅ |

| Delete services | ❌ |

| Manage routes | ✅ |

| Manage secrets | ✅ |

| Backoffice functions | ❌ |

## Create a service token

You will need an Akka authentication token to set up any CI/CD process. To create the service token, run the command below:

```command

akka project token create --description "My CI/CD system"

```

The description can be anything, but you should choose a description that will allow you to easily identify that token and what its purpose is.

The output will look similar to:

Token created: cst4.48dcc76ecd5f8a7786267714875c7037395f46aa4206bae1712d89fff37ad123 Copy and paste the token to a safe location. You will not be able to view the token again.

A token may be restricted to certain scopes with the `--scopes` flag. The available scopes are `all`, `container_registry`, `execution`, and `projects`.

## Configure `akka` in a CI/CD process

The basic steps to configure the Akka CLI to run in your CI/CD environment are:

- Configure the `AKKA_TOKEN` and `AKKA_PROJECT` environment variables in your CI/CD environment.

- Install the Akka CLI

The mechanism for configuring the environment variables will be specific to your CI/CD environment. Most cloud based CI/CD services have a mechanism for configuring secrets which get passed by environment variable.

To install the Akka CLI in your CI/CD environment, configure the environment to run the following command using `curl`:

```command

curl -sL https://doc.akka.io/install-cli.sh | bash

```

## Managing service tokens

You can view a list of all the service tokens for a project using the `akka project tokens list` command:

```command

$ akka project tokens list

ID DESCRIPTION SCOPES CREATED

308147ea-9b04-47e4-a308-dc2b4aab0c7d My token [all] 1h0m

```

To revoke a token, use the `akka project token revoke` command, passing the ID of the token you want to revoke:

```command

$ akka project token revoke 308147ea-9b04-47e4-a308-dc2b4aab0c7d

Token revoked

```

[Exporting metrics, logs, and traces](../observability-and-monitoring/observability-exports.html) [CI/CD with GitHub Actions](github-actions.html)

- [Akka](../index.html)

- [Operating](index.html)

# Operating

Akka offers two distinct operational approaches:

- **Self-managed operations**: For teams that prefer to operate Akka on their own infrastructure. This provides full control over runtime and operational details.

- **Akka Automated Operations**: For teams seeking a managed experience with built-in automation, observability, and scalability. Services are deployed either in our [serverless cloud](https://console.akka.io/) or your VPC.

## Feature comparison

| Feature | Self-managed Operations | Akka Automated Operations |

| --- | --- | --- |

| Akka runtime | ✅ | ✅ |

| Akka clustering | ✅ | ✅ |

| Elasticity | ✅ | ✅ |

| Resilience | ✅ | ✅ |

| Durable memory | ✅ | ✅ |

| Akka Orchestration | ✅ | ✅ |

| Akka Agents | ✅ | ✅ |

| Akka Memory | ✅ | ✅ |

| Akka Streaming | ✅ | ✅ |

| Metrics, logs, and traces | ✅ | ✅ |

| Deploy: Bare metal | ✅ | ❌ |

| Deploy: VMs | ✅ | ❌ |

| Deploy: Edge | ✅ | ❌ |

| Deploy: Containers | ✅ | ❌ |

| Deploy: PaaS | ✅ | ❌ |

| Deploy: Serverless | ❌ | ✅ |

| Deploy: Your VPC | ❌ | ✅ |

| Deploy: Your Edge VPC | ❌ | ✅ |

| Auto-elasticity | ❌ | ✅ |

| Multi-tenant services | ❌ | ✅ |

| Multi-region operations | ❌ | ✅ |

| Persistence oversight | ❌ | ✅ |

| Certificate and key rotation | ❌ | ✅ |

| Multi-org access controls | ❌ | ✅ |

| No downtime updates | ❌ | ✅ |

## Service packaging

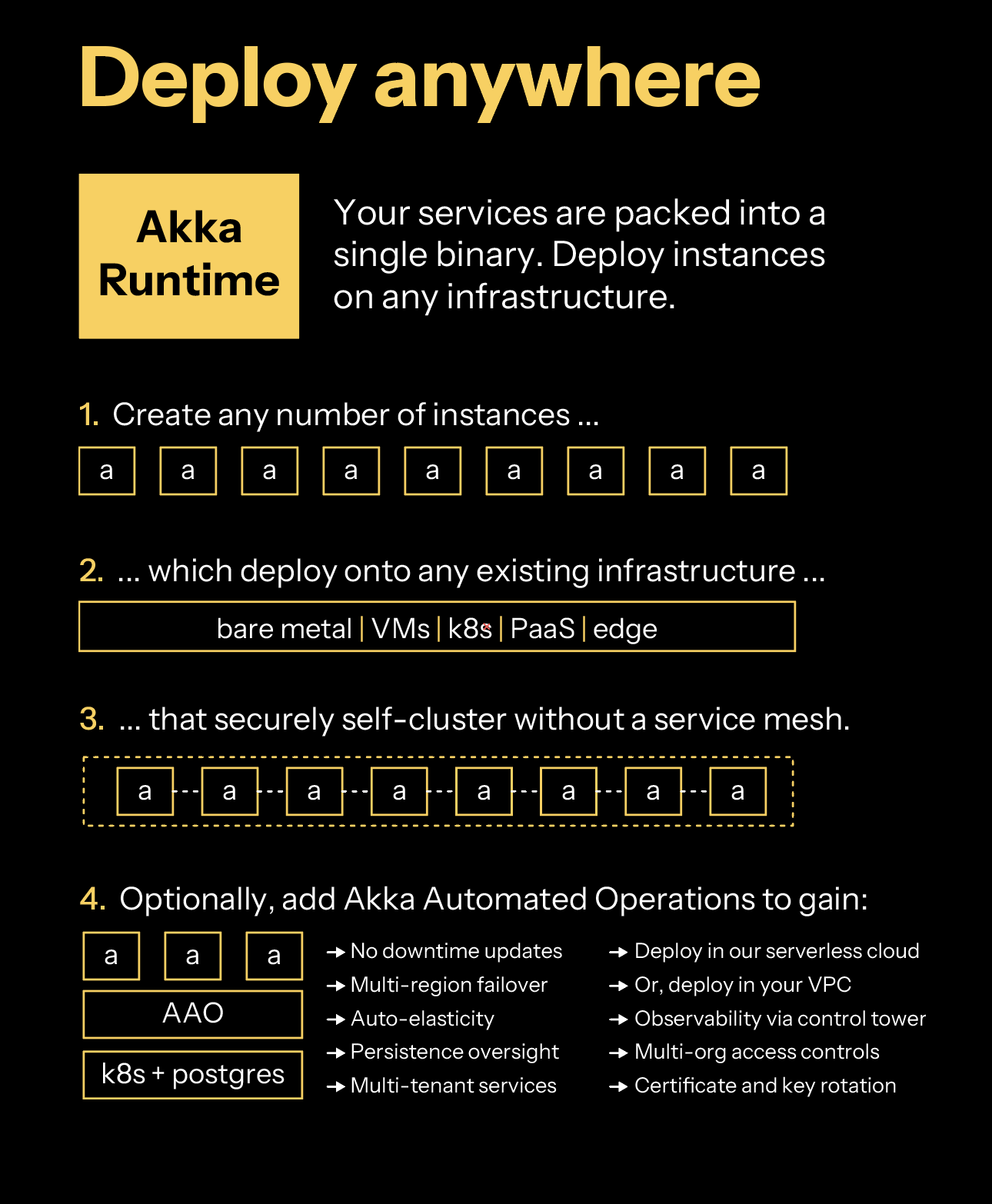

The services you build with Akka components are composable, which can be combined to design agentic, transactional, analytics, edge, and digital twin systems. You can create services with one component or many.

Your services are packed into a single binary. You create instances of Akka that you can operate on any infrastructure: Platform as a Service (PaaS), Kubernetes, Docker Compose, virtual machines (VMs), bare metal, or edge.

Akka services self-cluster without you needing to install a service mesh. Akka clustering provides elasticity and resilience to your agentic services. In addition to data sharding, data rebalancing, and traffic routing, Akka clustering has built-in support for addressing split brain networking disruptions.

Optionally, you can deploy your agentic services into [Akka Automated Operations](akka-platform.html), which provides a global control plane, multi-tenancy, multi-region operations (for compliance data pinning, failover, and disaster recovery), auto-elasticity based upon traffic load, and persistence management (memory auto-scaling).

[Using an AI coding assistant](../sdk/ai-coding-assistant.html) [Self-managed operations](configuring.html)

- [Akka](../index.html)

- [Operating](index.html)

- [Operator best practices](operator-best-practices.html)

# Operator best practices

## Regionalization precautions

### Primary selection mode

Akka services have two different modes, **pinned-region** or **request-region**, which controls how they perform replication for stateful components. This is outlined in [Selecting primary for stateful components](regions/index.html#selecting-primary). It is important to note that setting this mode has the following implications for your project.

#### Event Sourced Entities

If the service is set to pinned-region primary selection mode Event Sourced Entities will use the primary project region as their primary data region. They will still replicate events, and hence state, to all regions in the project, but will only be writeable in the primary. Akka will route update requests to this region from any endpoint. If the primary selection mode is request-region then each entity instance will use the region where the write requests occur, after synchronizing events from the previous primary region.

#### Workflows

Workflows handle writes, reads and forwarding of requests in the same way as Event Sourced Entities, with the addition that actions are only performed by the primary Workflow instance.

#### Key Value Entities

Static primary selection mode impacts Key Value Entities by specifying one region, the primary region, to be the source for all Key Value Entities in the project. Routing for Key Value Entities automatically forwards all requests from any regional endpoint to this primary region.

### Primary region

Changing primary regions is a serious operation and should be thought out carefully. Ideally you plan this ahead of time and synchronize the regions by allowing the replication lag to drop to zero. You can put the project into a read only mode that will stop any writes from happening if you want to be sure that there will be zero data collisions when you change the primary.

| | At this time [Key Value Entities](../sdk/key-value-entities.html) do not replicate data between regions, but Akka will route all traffic to the correct region for reads and writes. If you change the primary region on a project with Key Value Entities the current state of the entities will be lost. |

### Container registries

Container registries are regional in Akka. If you decide to use [Configure an external container registry](projects/external-container-registries.html) be aware that you should have container registries in or near each of the regions in your project. If you only have your container images in one place and that place becomes unavailable your services will not be able to start new instances.

[Enable CLI command completion](cli/command-completion.html) [Reference](../reference/index.html)

- [Akka](../../index.html)

- [Operating](../index.html)

- [Akka Automated Operations](../akka-platform.html)

- [Projects](index.html)

- [Configure message brokers](message-brokers.html)

# Configure message brokers

Akka eventing integrates with *Google Cloud Pub/Sub* and managed Kafka services such as *Confluent Cloud*, *Amazon Managed Streaming for Apache Kafka (Amazon MSK)*, and *Aiven for Apache Kafka* to enable asynchronous messaging and integrations with other systems.

Message brokers are configured at the Akka project level. A project can have one broker configuration. Akka eventing is independent of the broker technology.

## Broker services

Follow the detailed steps to configure the desired message broker service for use with your Akka project:

- [Google Pub/Sub](broker-google-pubsub.html)

- [Confluent Cloud](broker-confluent.html)

- [Amazon MSK](broker-aws-msk.html)

- [Aiven for Apache Kafka](broker-aiven.html)

We continuously evaluate additional integrations for potential built-in support in Akka. If you have specific requirements, please contact us at [support@akka.io](mailto:support@akka.io).

For running Akka services that integrate with a message broker locally, see [running a service with broker support](../../sdk/running-locally.html#_local_broker_support).

## See also

- `akka projects config` commands

- [Google Cloud Pub/Sub](https://cloud.google.com/pubsub/docs/overview)

- [Confluent Cloud](https://www.confluent.io/confluent-cloud)

- [Amazon MSK](https://aws.amazon.com/msk/)

- [Aiven for Apache Kafka](https://aiven.io/kafka)

[Configure an external container registry](external-container-registries.html) [Google Pub/Sub](broker-google-pubsub.html)

- [Akka](../../index.html)

- [Operating](../index.html)

- [Akka Automated Operations](../akka-platform.html)

- [Projects](index.html)

- [Configure message brokers](message-brokers.html)

- [Aiven for Kafka](broker-aiven.html)

# Using Aiven for Apache Kafka

Akka connects to [Aiven](https://aiven.io/) 's Kafka service via TLS, using a CA certificate provided by Aiven for the service, authenticating using SASL (Simple Authentication and Security Layer) SCRAM.

| | In this guide we use the default `avnadmin` account, but you may want to create a specific service user to use for your Akka service connection. |

## Steps to connect to an Aiven Kafka service

1. Log in to the [Aiven web console](https://console.aiven.io/) and select the Aiven Kafka service Akka should connect to.

2. Enable SASL for your Aiven Kafka (See Aiven’s [Use SASL Authentication with Apache Kafka](https://docs.aiven.io/docs/products/kafka/howto/kafka-sasl-auth))

1. Scroll down the Service overview page to the **Advanced configuration** section.

2. Turn on the setting labelled `kafka_authentication_methods.sasl`, and click **Save advanced configuration**.

3. The connection information at the top of the Service overview page will now offer the ability to connect via SASL or via client certificate. Select SASL in "Authentication Method" to show the right connection details:

4. Download the CA Certificate via the link in the connection information.

3. Ensure you are on the correct Akka project

```command

akka config get-project

```

4. Create an Akka TLS CA secret with the CA certificate for the service (e.g. called `kafka-ca-cert`)

```command

akka secret create tls-ca kafka-ca-cert --cert ./ca.pem

```

5. Copy the CA password from the "Connection Information" and store it in an Akka secret (e.g. called `kafka-secret`)

```command

akka secret create generic kafka-secret --literal pwd=

```

6. Use `akka projects config` to set the broker details. Set the Aiven username and service URI according to the Aiven connection information page.

```command

akka projects config set broker \